Many of our research projects fall under one of the following general themes. Note that this page is still being updated to include all publications.

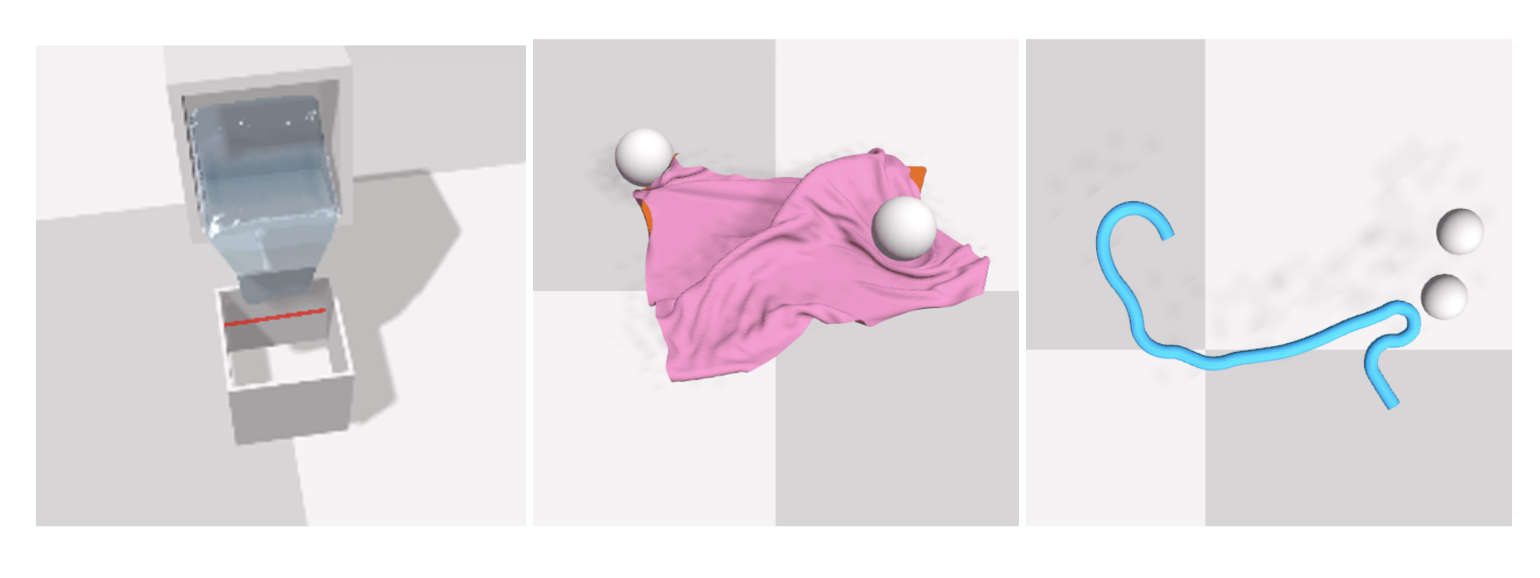

Deformable / Non-Rigid Object Manipulation

Deformable objects are challenging from both a perceptual and a dynamics perspective. A crumpled cloth has many self-occlusions, so its configuration is hard to infer from observations, and the dynamics of a cloth are complex to model and incorporate into planning algorithms. We develop algorithms for manipulating deformable objects such as cloth, liquids, and dough, as well as articulated objects.

Relevant Publications

3D Affordance Reasoning for Object Manipulation

In order for a robot to interact with an object, the robot must infer the object's “affordances”: how the object moves as the robot interacts with it and how the object can interact with other objects in the environment. We develop robot perception algorithms that learn to estimate these affordances and then use these estimates to learn to manipulate objects to accomplish a task.

Relevant Publications

Multimodal Learning

Robots should use all of the sensing modalities available to them, such as depth, RGB, and tactile data. We have developed methods to intelligently integrate these modalities.

Relevant Publications

Force-Modulated Visual Policy for Robot-Assisted Dressing with Arm Motions

Conference on Robot Learning (CoRL), 2025

Force Constrained Visual Policy: Safe Robot-Assisted Dressing via Multi-Modal Sensing

Robotics and Automation Letters (RAL), 2024

Learning to Singulate Layers of Cloth based on Tactile Feedback

International Conference on Intelligent Robots and Systems (IROS), 2022

PLAS: Latent Action Space for Offline Reinforcement Learning

Conference on Robot Learning (CoRL), 2020

Cloth Region Segmentation for Robust Grasp Selection

International Conference on Intelligent Robots and Systems (IROS), 2020

Multi-Modal Transfer Learning for Grasping Transparent and Specular Objects

Robotics and Automation Letters (RAL) with presentation at the International Conference on Robotics and Automation (ICRA), 2020

Active Perception

Rather than passively observing a scene, robots can take actions that help them perceive it better; this is known as “active perception.”

Relevant Publications

Learn from What We HAVE: History-Aware VErifier that Reasons about Past Interactions Online

Conference on Robot Learning (CoRL), 2025

FlowBotHD: History-Aware Diffuser Handling Ambiguities in Articulated Objects Manipulation

Conference on Robot Learning (CoRL), 2024

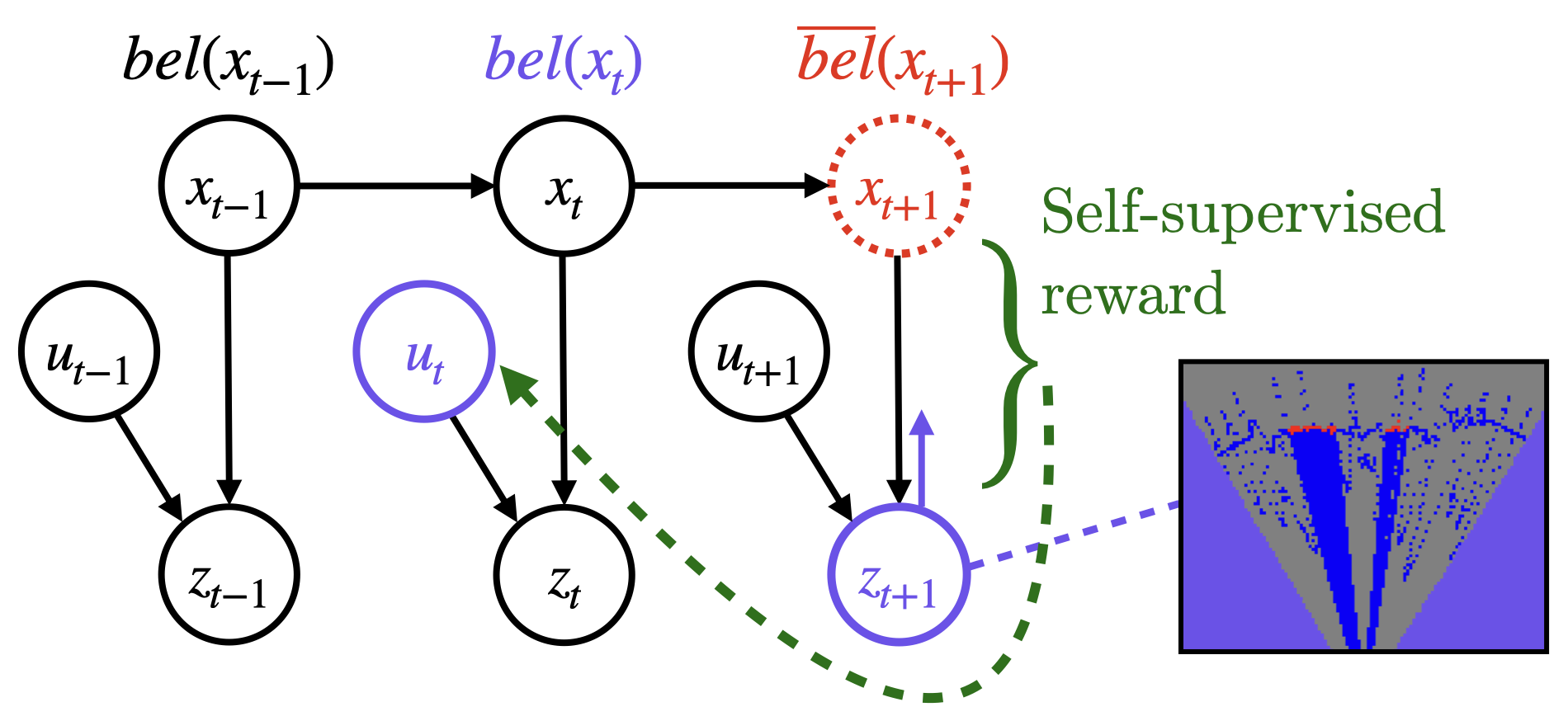

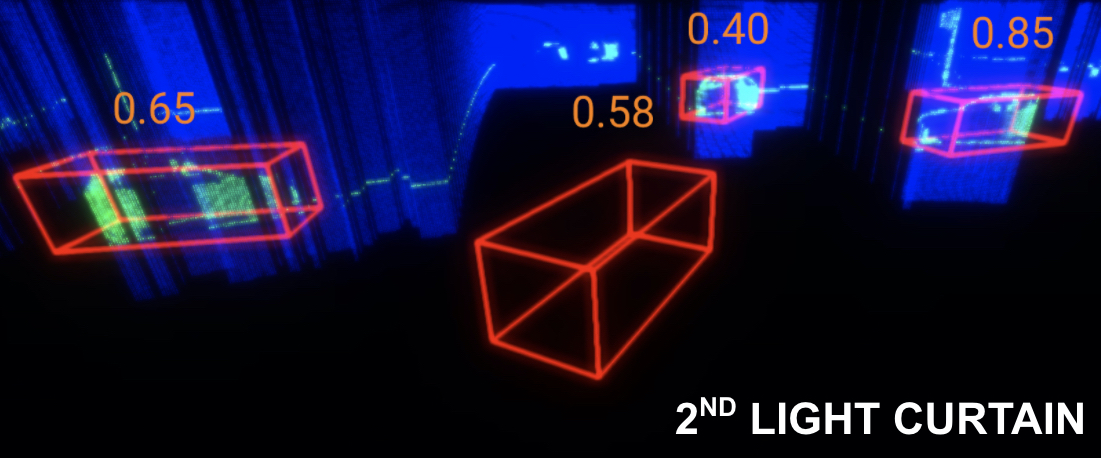

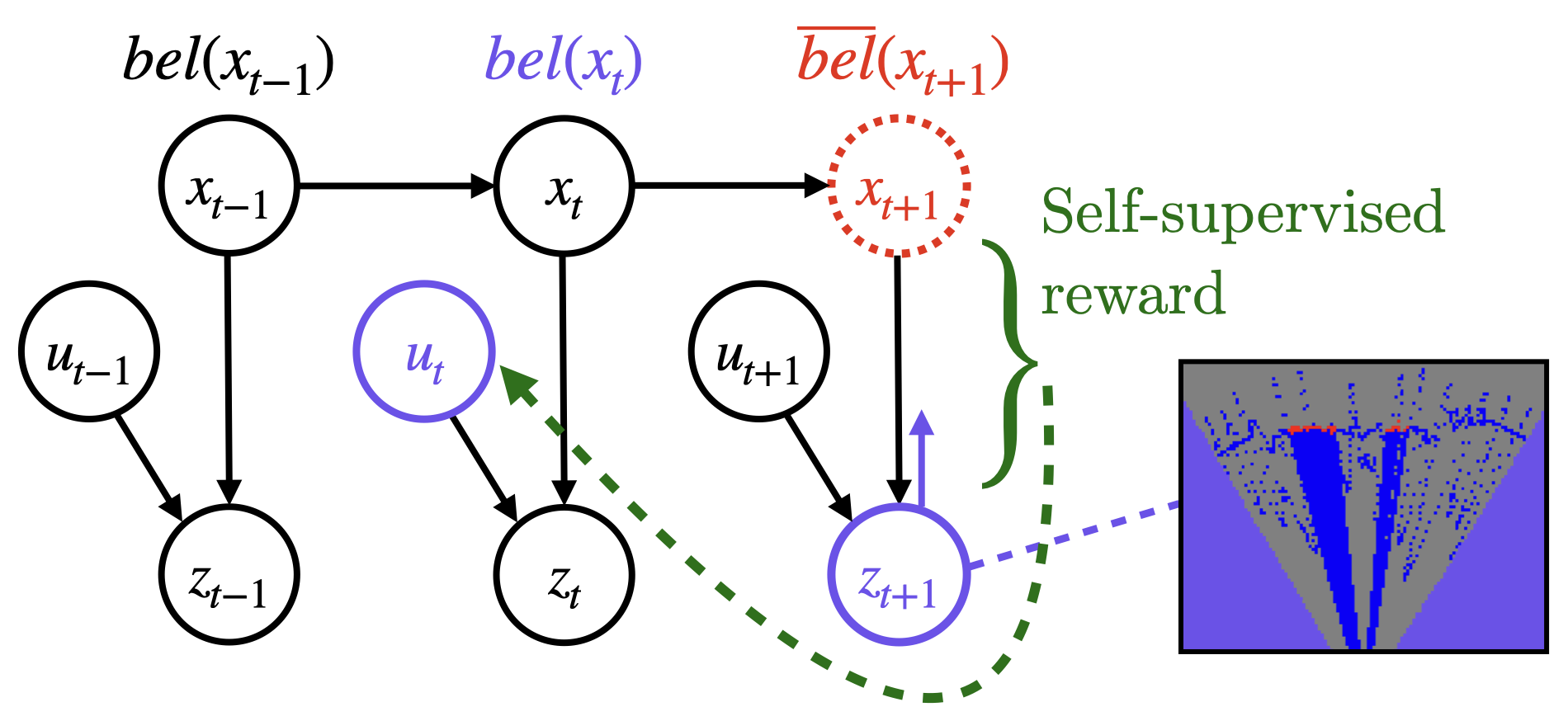

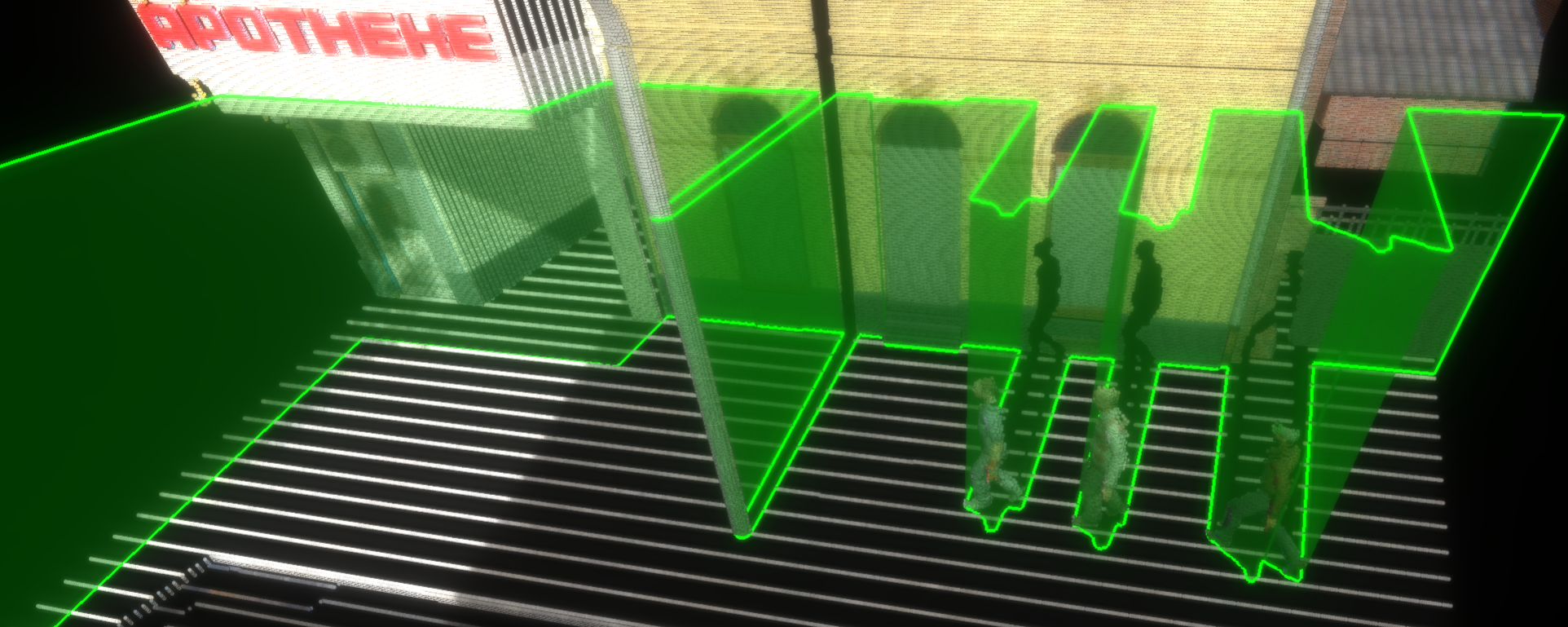

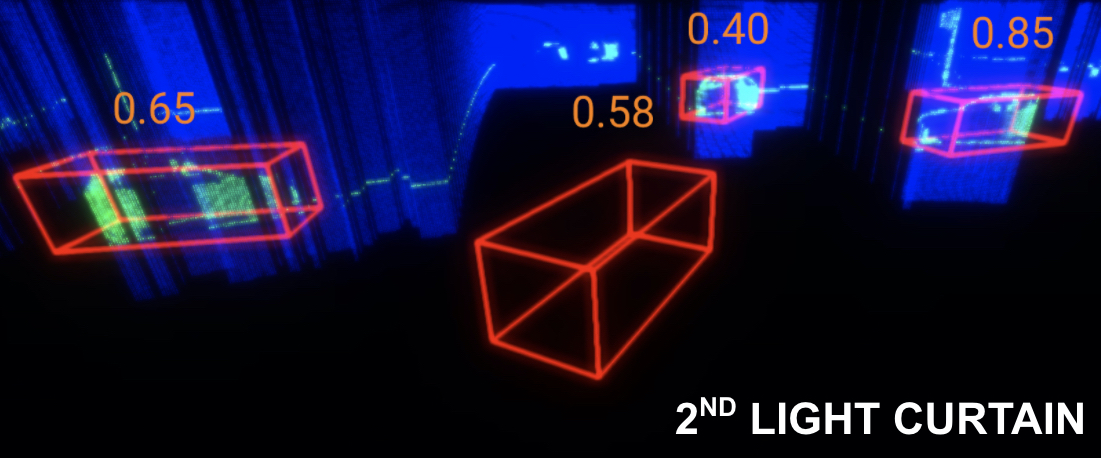

Active Velocity Estimation using Light Curtains via Self-Supervised Multi-Armed Bandits

Robotics: Science and Systems (RSS), 2023

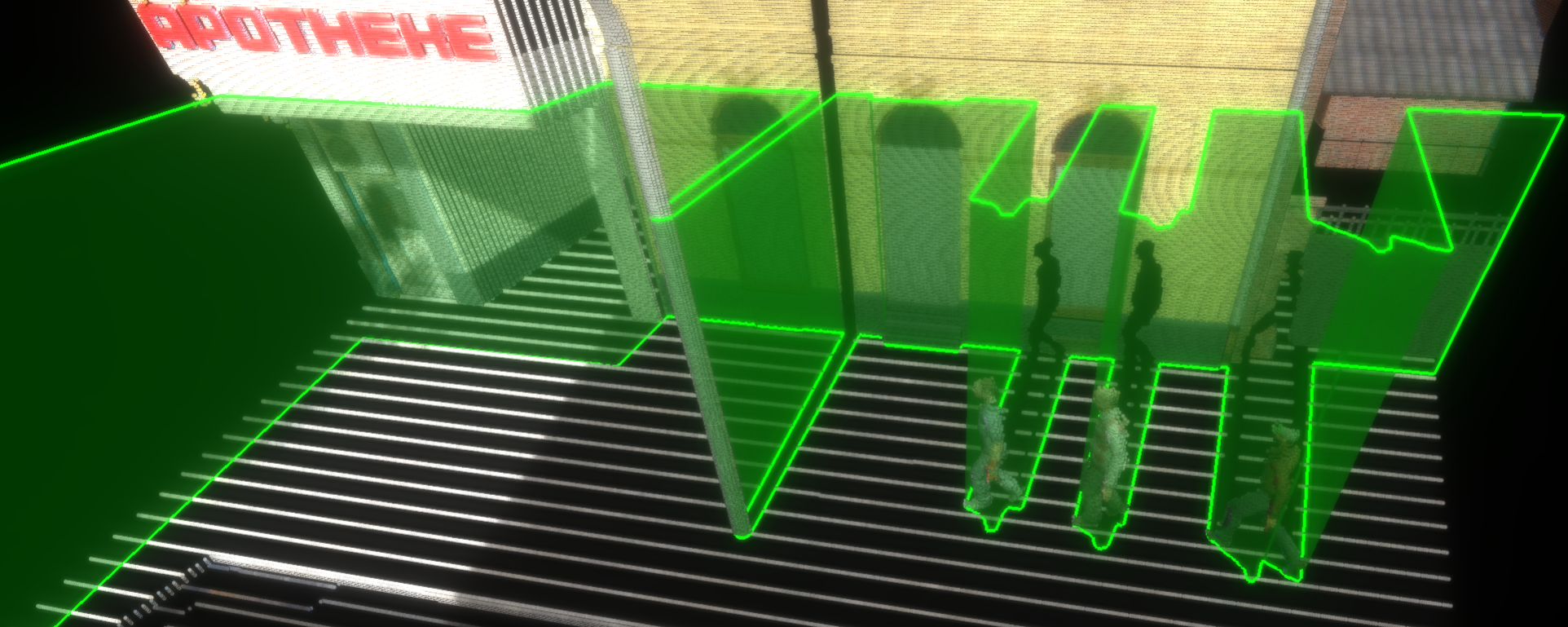

Active Safety Envelopes using Light Curtains with Probabilistic Guarantees

Robotics: Science and Systems (RSS), 2021

Exploiting & Refining Depth Distributions with Triangulation Light Curtains

Conference on Computer Vision and Pattern Recognition (CVPR), 2021

Active Perception using Light Curtains for Autonomous Driving

European Conference on Computer Vision (ECCV), 2020

Self-Supervised Learning for Robotics

Rather than relying on hand-annotated data, self-supervised learning can enable robots to learn from large unlabeled datasets.

Relevant Publications

Active Velocity Estimation using Light Curtains via Self-Supervised Multi-Armed Bandits

Robotics: Science and Systems (RSS), 2023

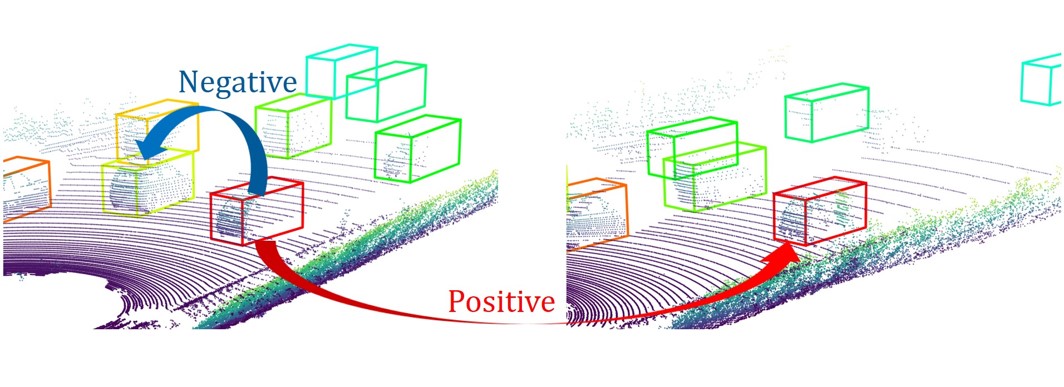

Point Cloud Forecasting as a Proxy for 4D Occupancy Forecasting

Conference on Computer Vision and Pattern Recognition (CVPR), 2023

Self-supervised Cloth Reconstruction via Action-conditioned Cloth Tracking

International Conference on Robotics and Automation (ICRA), 2023

Differentiable Raycasting for Self-supervised Occupancy Forecasting

European Conference on Computer Vision (ECCV), 2022

Self-supervised Transparent Liquid Segmentation for Robotic Pouring

International Conference on Robotics and Automation (ICRA), 2022

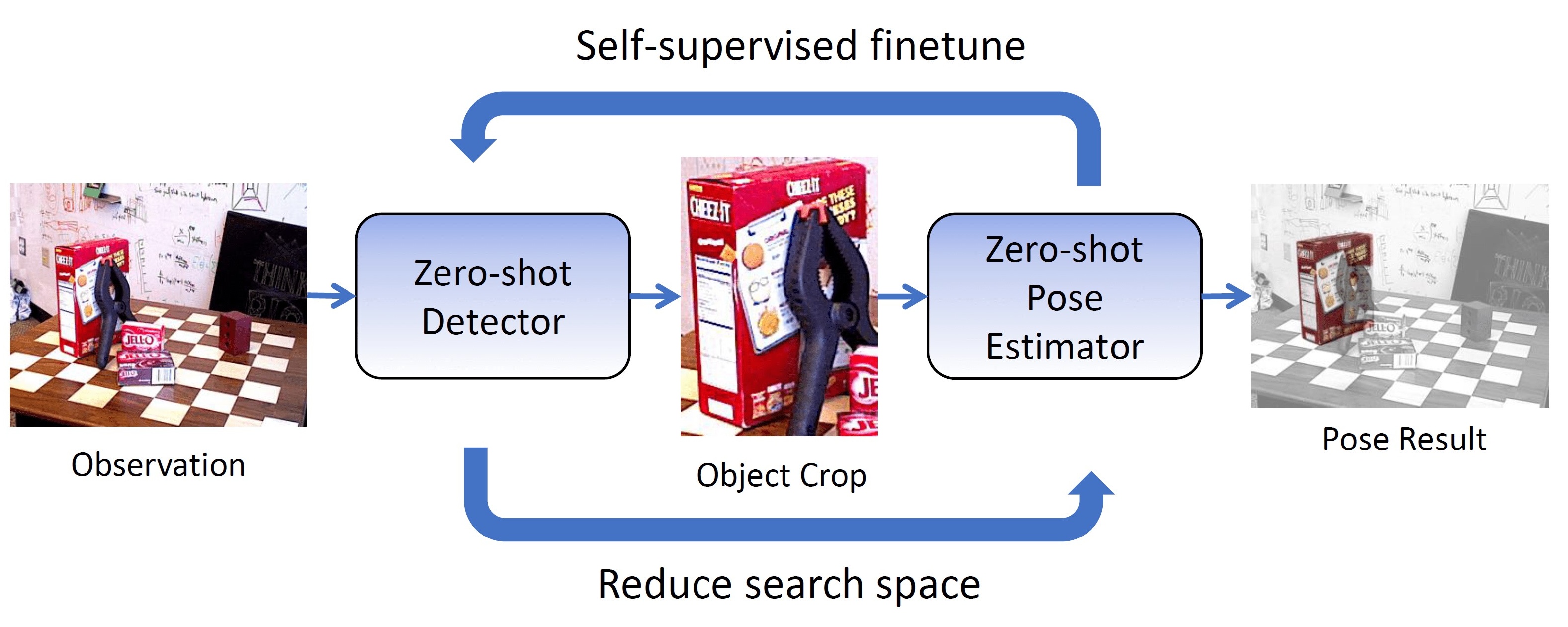

OSSID: Online Self-Supervised Instance Detection by (and for) Pose Estimation

Robotics and Automation Letters (RAL) with presentation at the International Conference on Robotics and Automation (ICRA), 2022

Self-Supervised Point Cloud Completion via Inpainting

British Machine Vision Conference (BMVC), 2021

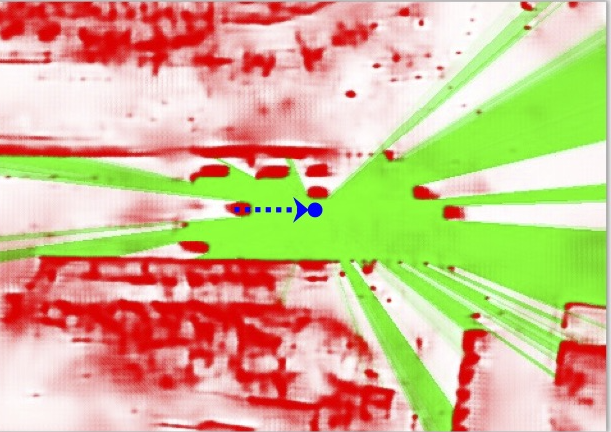

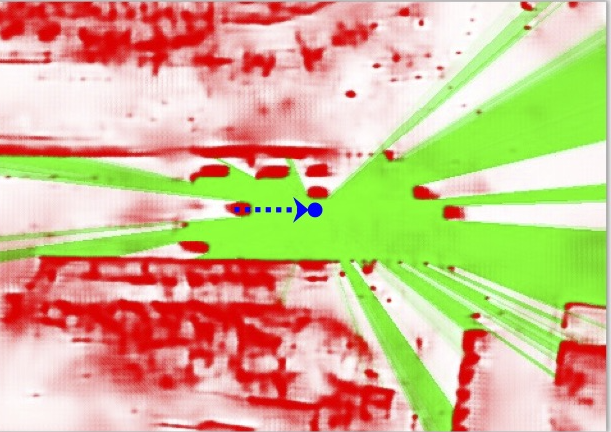

Safe Local Motion Planning with Self-Supervised Freespace Forecasting

Conference on Computer Vision and Pattern Recognition (CVPR), 2021

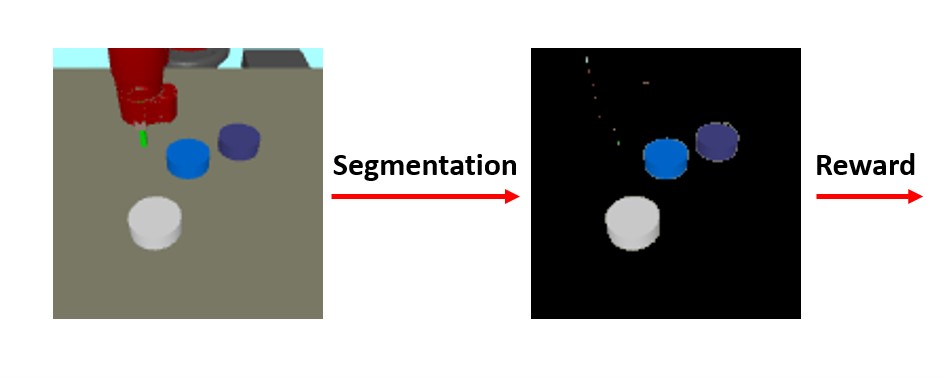

Visual Self-Supervised Reinforcement Learning with Object Reasoning

Conference on Robot Learning (CoRL), 2020

Uncertainty-aware Self-supervised 3D Data Association

International Conference on Intelligent Robots and Systems (IROS), 2020

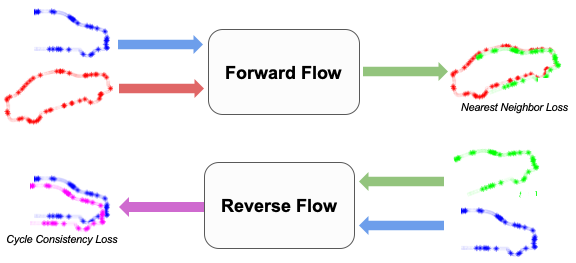

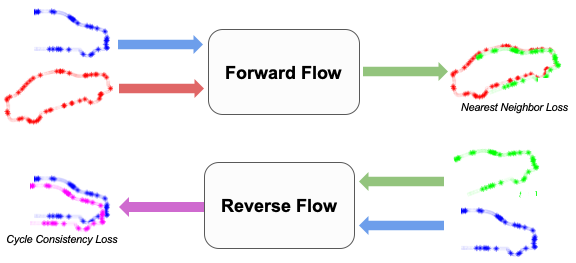

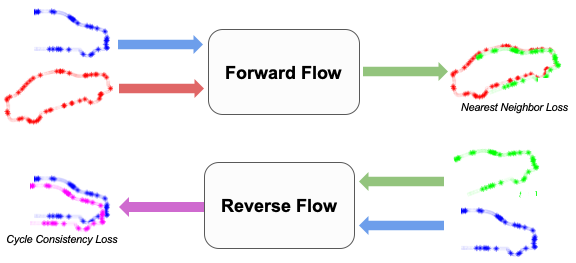

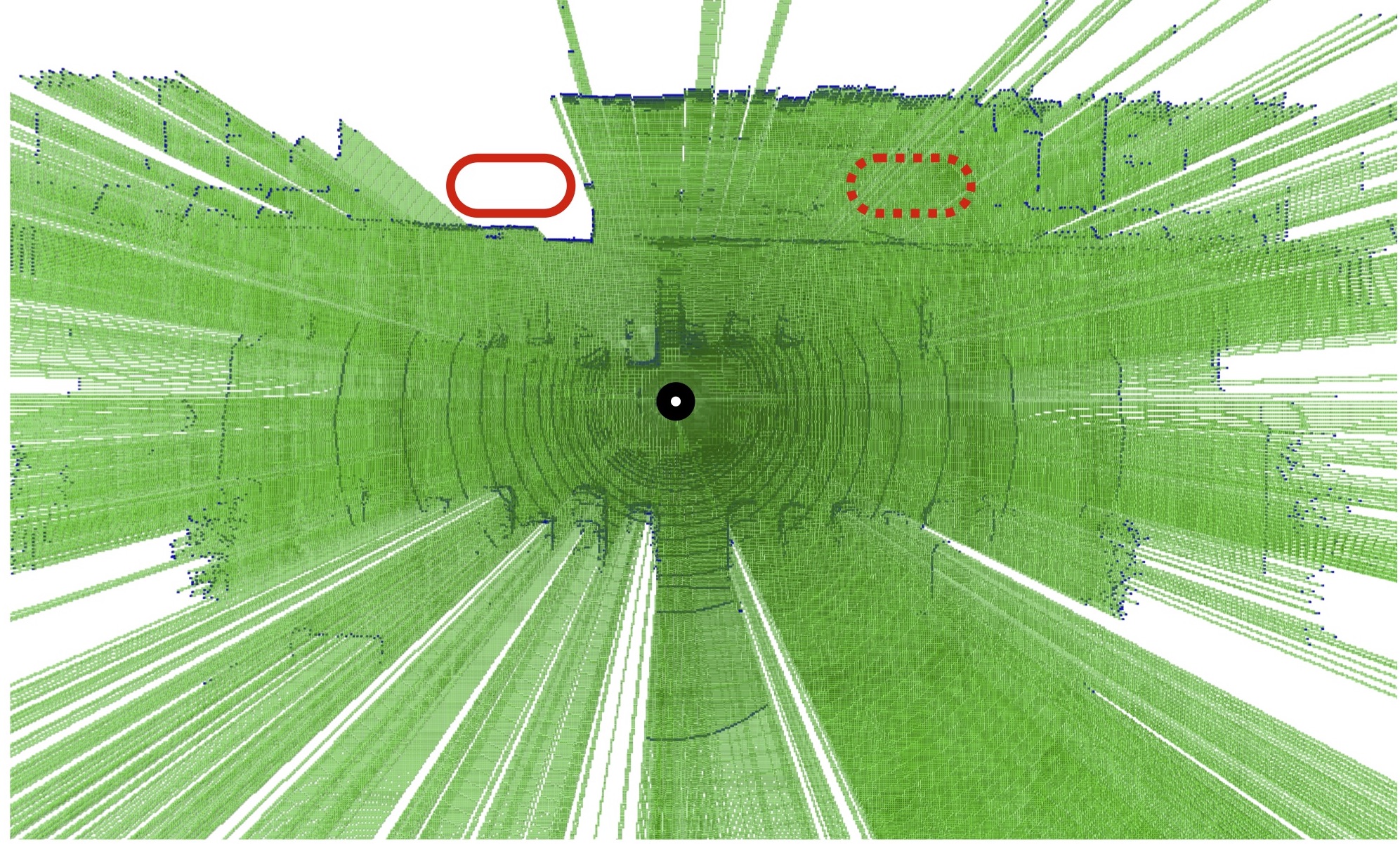

Just Go with the Flow: Self-Supervised Scene Flow Estimation

Conference on Computer Vision and Pattern Recognition (CVPR), 2020

Previous Directions

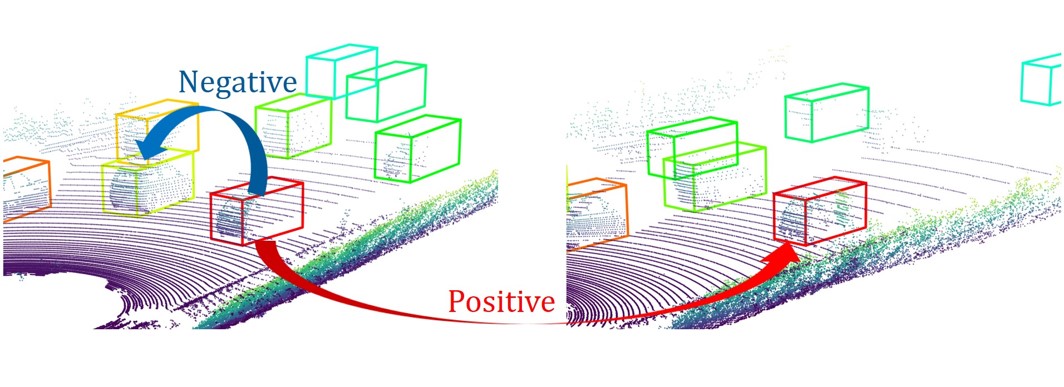

Object tracking

Tracking involves consistently locating an object as it moves across a scene, or consistently locating a point on an object as it moves. To understand how to interact with objects, a robot must be able to track them through changes in position, viewpoint, lighting, occlusion, and other factors. Improvements in this area should also enable autonomous vehicles to operate more safely around dynamic objects (e.g., pedestrians, bicyclists, and other vehicles).

Relevant Publications

Self-supervised Cloth Reconstruction via Action-conditioned Cloth Tracking

International Conference on Robotics and Automation (ICRA), 2023

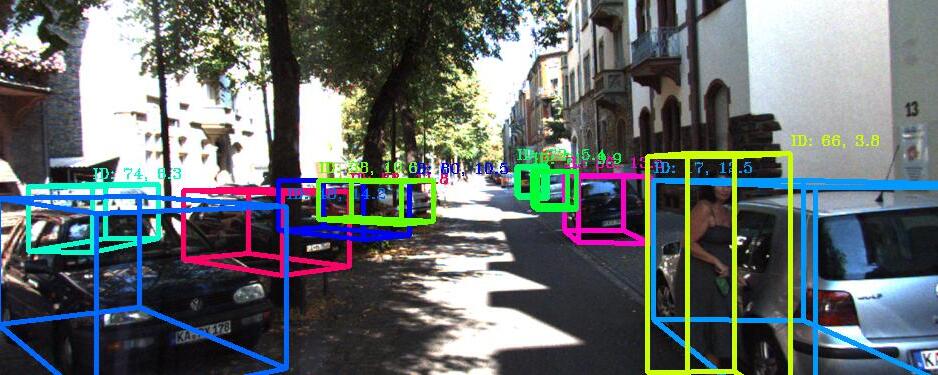

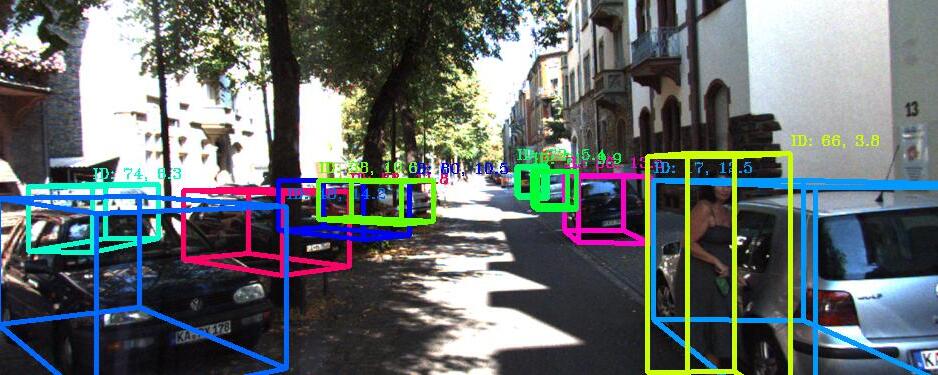

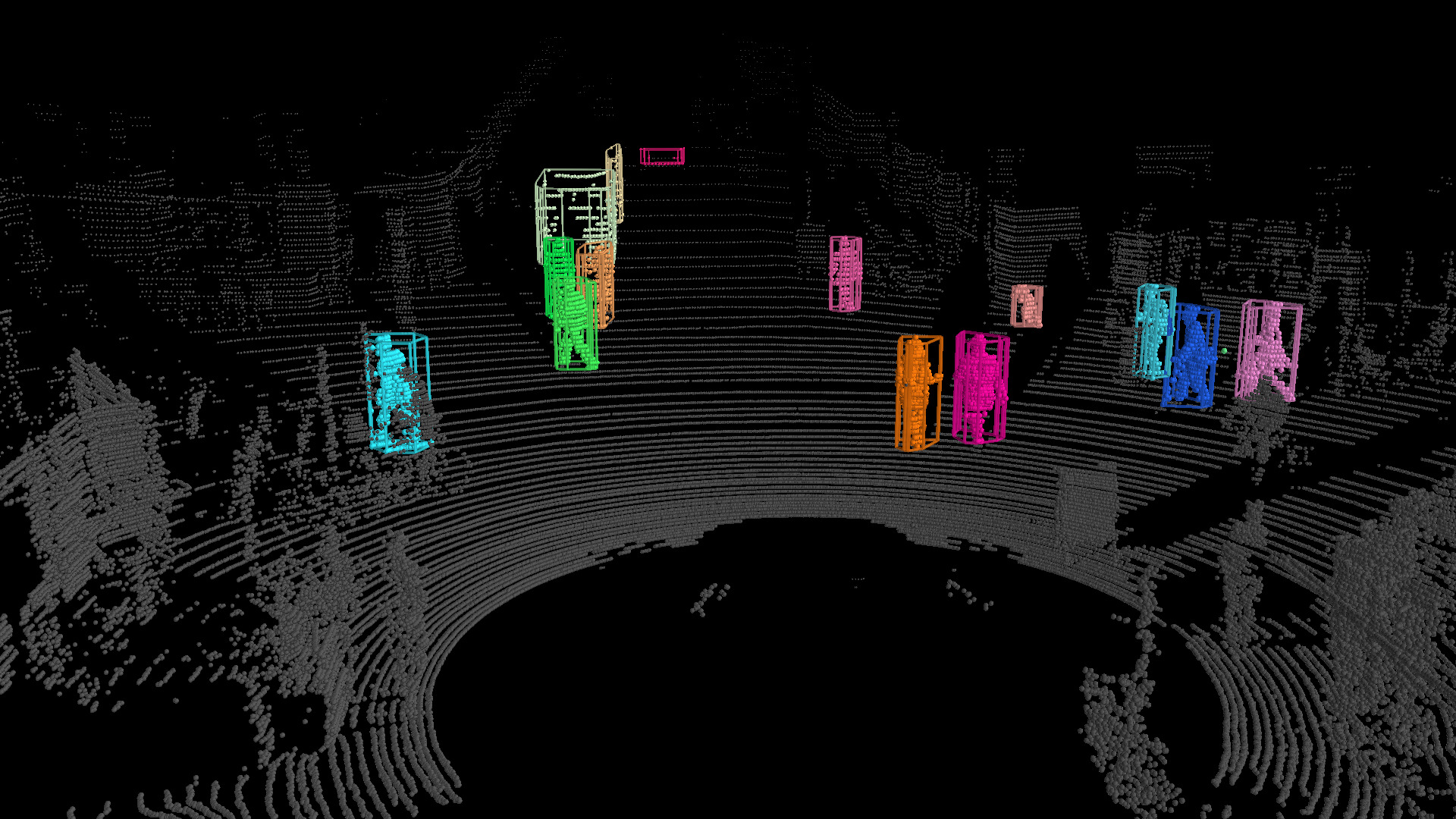

3D Multi-Object Tracking: A Baseline and New Evaluation Metrics

International Conference on Intelligent Robots and Systems (IROS), 2020

Just Go with the Flow: Self-Supervised Scene Flow Estimation

Conference on Computer Vision and Pattern Recognition (CVPR), 2020

A Probabilistic Framework for Real-time 3D Segmentation using Spatial, Temporal, and Semantic Cues

Robotics: Science and Systems (RSS), 2016

Reinforcement Learning Algorithms

Robots can use data, from either the real world or a simulator, to learn how to perform a task. This is especially important for tasks, such as deformable object manipulation, that are difficult to achieve with traditional techniques such as motion planning. We have developed novel reinforcement learning algorithms that learn more effectively from data.

Relevant Publications

HACMan++: Spatially-Grounded Motion Primitives for Manipulation

Robotics: Science and Systems (RSS), 2024

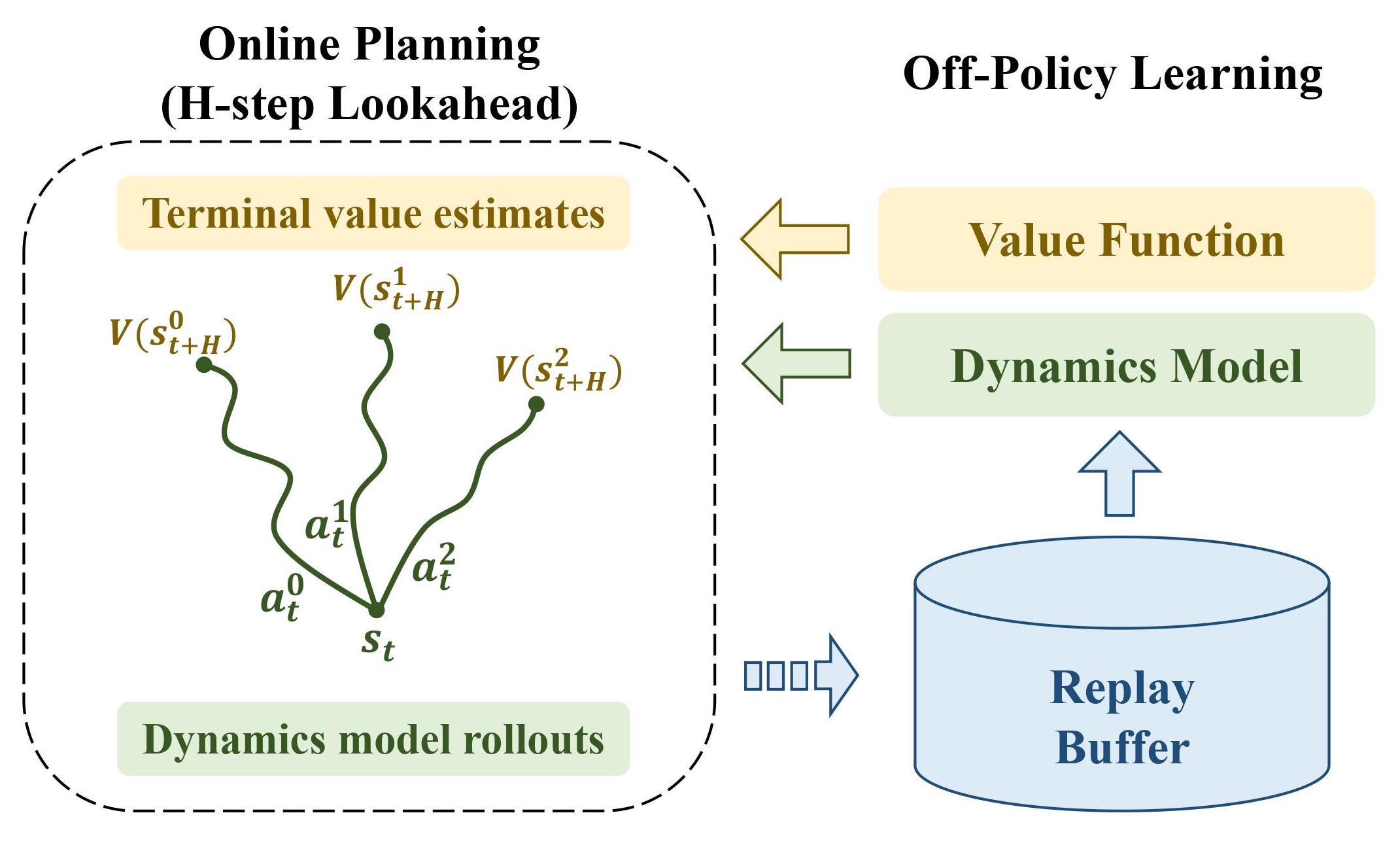

Learning Off-policy for Online Planning

Conference on Robot Learning (CoRL), 2021

PLAS: Latent Action Space for Offline Reinforcement Learning

Conference on Robot Learning (CoRL), 2020

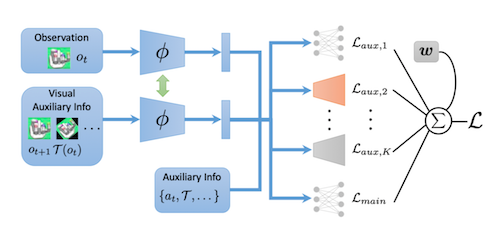

Adaptive Auxiliary Task Weighting for Reinforcement Learning

Neural Information Processing Systems (NeurIPS), 2019

Automatic Goal Generation for Reinforcement Learning Agents

International Conference on Machine Learning (ICML), 2018

Reverse Curriculum Generation for Reinforcement Learning

Conference on Robot Learning (CoRL), 2017

Constrained Policy Optimization

International Conference on Machine Learning (ICML), 2017

Autonomous Driving

In the domain of autonomous driving, we have developed novel methods for segmentation, object detection, tracking, and velocity estimation.

Relevant Publications

Point Cloud Forecasting as a Proxy for 4D Occupancy Forecasting

Conference on Computer Vision and Pattern Recognition (CVPR), 2023

Differentiable Raycasting for Self-supervised Occupancy Forecasting

European Conference on Computer Vision (ECCV), 2022

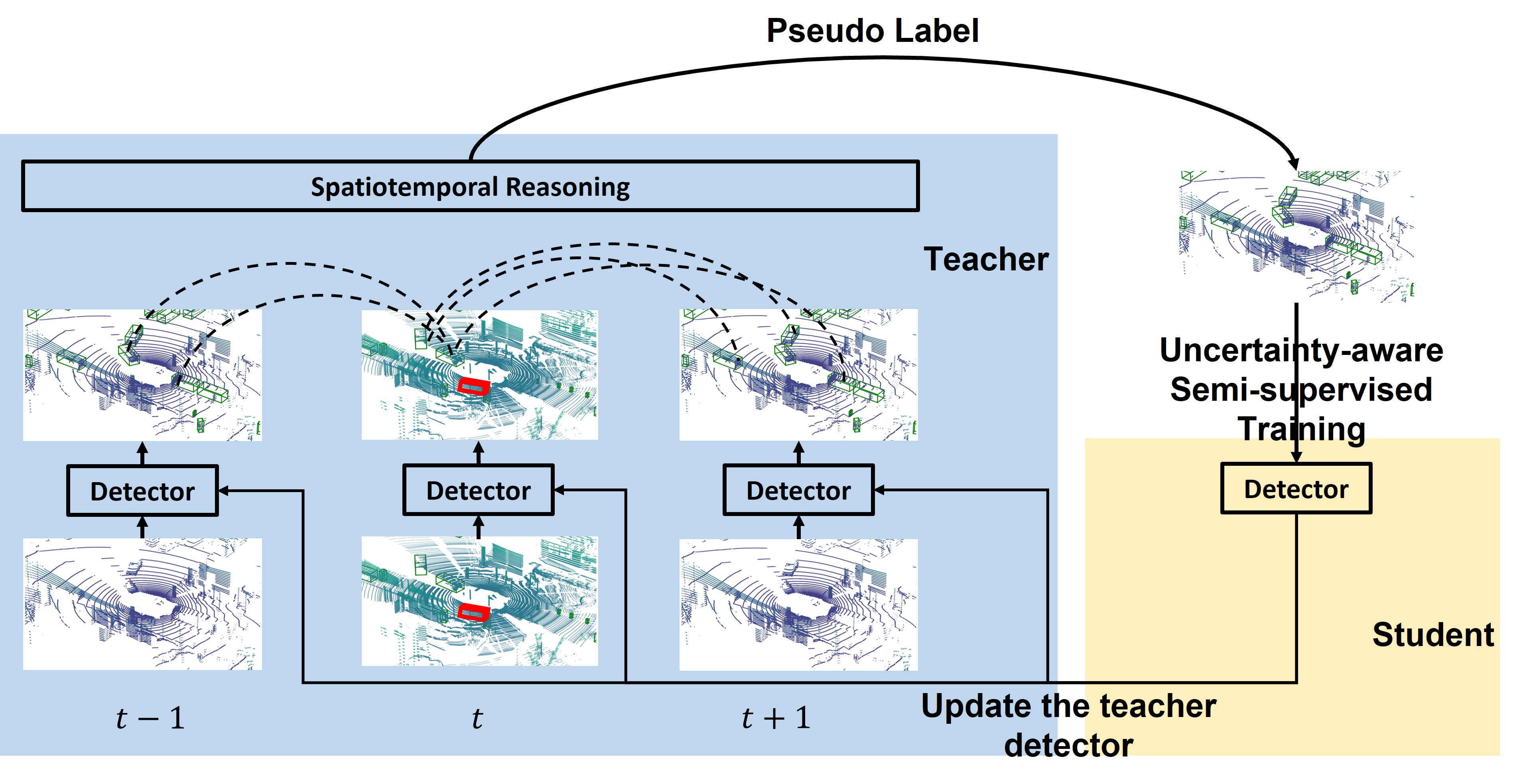

Semi-supervised 3D Object Detection via Temporal Graph Neural Networks

International Conference on 3D Vision (3DV), 2021

Active Safety Envelopes using Light Curtains with Probabilistic Guarantees

Robotics: Science and Systems (RSS), 2021

Safe Local Motion Planning with Self-Supervised Freespace Forecasting

Conference on Computer Vision and Pattern Recognition (CVPR), 2021

Active Perception using Light Curtains for Autonomous Driving

European Conference on Computer Vision (ECCV), 2020

Uncertainty-aware Self-supervised 3D Data Association

International Conference on Intelligent Robots and Systems (IROS), 2020

3D Multi-Object Tracking: A Baseline and New Evaluation Metrics

International Conference on Intelligent Robots and Systems (IROS), 2020

Just Go with the Flow: Self-Supervised Scene Flow Estimation

Conference on Computer Vision and Pattern Recognition (CVPR), 2020

What You See is What You Get: Exploiting Visibility for 3D Object Detection

Conference on Computer Vision and Pattern Recognition (CVPR), 2020

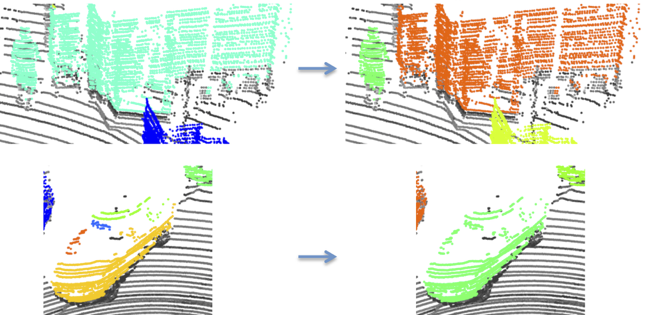

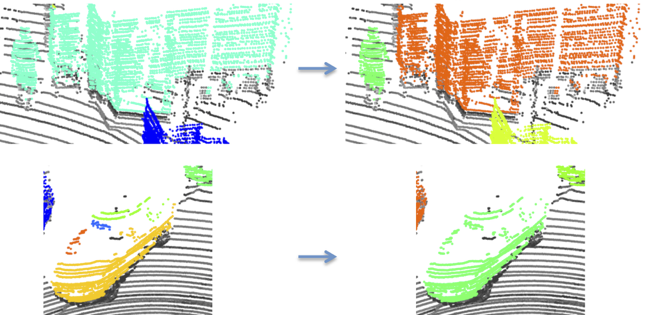

Learning to Optimally Segment Point Clouds

Robotics and Automation Letters (RAL) with presentation at the International Conference on Robotics and Automation (ICRA), 2020

PCN: Point Completion Network

International Conference on 3D Vision (3DV), 2018

A Probabilistic Framework for Real-time 3D Segmentation using Spatial, Temporal, and Semantic Cues

Robotics: Science and Systems (RSS), 2016
